Visual Attention applied to Object Recognition

Eye-tracking and attention visualization for object recognition

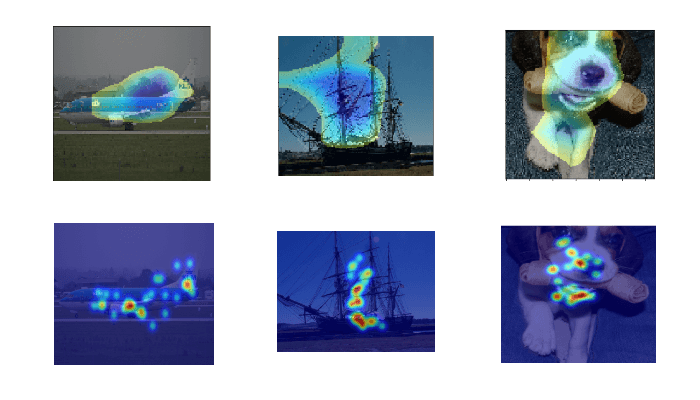

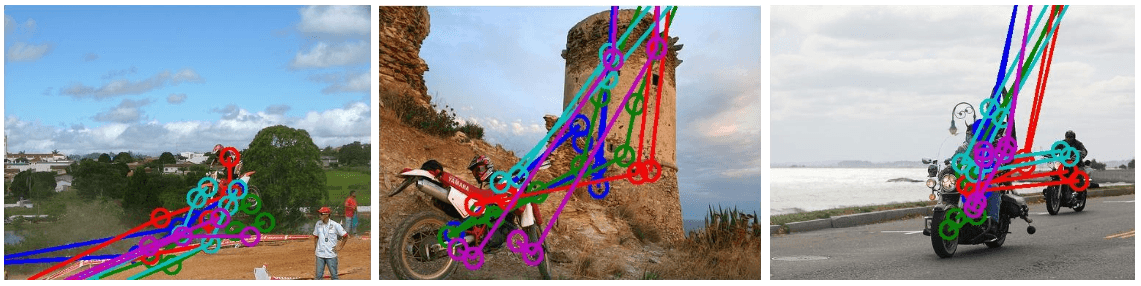

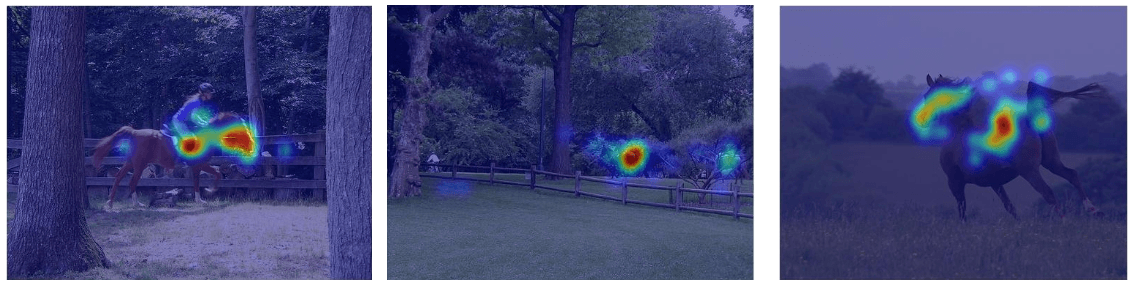

Comparing CNN attention with human eye-tracking.

Project description

The aim of the project is to compare how Convolutional Neural Networks (CNNs) and humans see the world by examining where each directs attention during an object recognition task.

Overview

With the rise of visual attention mechanisms in machine learning, it is now possible to model the cognitive process of paying attention in a computer. For a Cognitive Science course project, we set out to answer two questions: whether a computer looks at the same regions as a human does when recognizing objects from 10 categories, and whether human eye-tracking data can be used to speed up training of the machine learning model.

The project is part of a Cognitive Science course at the University of Copenhagen.

Technical Details

We use the POET dataset, which provides eye-tracking data from multiple annotators who classified over 6 thousand images into 10 categories.

The project is divided into multiple parts that explore different training environments and the relationship between machine and human attention:

Results

The final report can be found here. My work is covered in the sections on developing the attention visualization based on gradient theory and on comparing CAM, soft attention, and human attention.

My contribution

I came up with the idea for the project and devised the approach. I proposed a novel approach to visualizing human attention from eye-tracking data using gradient theory.

I developed a soft attention model in TensorFlow and extended an open-source CAM model. I visualized both attention mechanisms and compared them to human attention using the Pearson correlation coefficient (PCC).
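
To illustrate the PCC comparison, here is a minimal sketch of scoring how closely a model attention map matches a human attention map. It is not the project's actual code: the function name `pearson_correlation`, the nested-list map representation, and the toy 3x3 values are all assumptions made for illustration. It uses only the Python standard library, whereas a real pipeline would more likely operate on NumPy arrays or TensorFlow tensors.

```python
import math


def pearson_correlation(map_a, map_b):
    """Pearson correlation coefficient between two equally sized 2D maps.

    Hypothetical helper: each map is a list of rows of numeric intensities.
    """
    # Flatten both maps so that each pixel becomes one paired observation.
    a = [v for row in map_a for v in row]
    b = [v for row in map_b for v in row]
    if not a or len(a) != len(b):
        raise ValueError("maps must be non-empty and contain the same number of pixels")

    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)

    # Covariance and variances (the shared 1/n factors cancel in the ratio).
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("PCC is undefined for a constant map")

    return cov / math.sqrt(var_a * var_b)


# Toy 3x3 maps with made-up values (not project data).
model_attention = [[0.0, 0.1, 0.0], [0.1, 0.6, 0.1], [0.0, 0.1, 0.0]]
human_attention = [[0.0, 0.2, 0.0], [0.2, 0.2, 0.2], [0.0, 0.2, 0.0]]
score = pearson_correlation(model_attention, human_attention)
```

A score near 1 means the two maps highlight the same regions, a score near 0 means no linear relationship, and a negative score means one map is bright where the other is dark. Because PCC is invariant to scaling and shifting of intensities, the maps do not need to be normalized to the same range before comparison, but they do need the same resolution.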